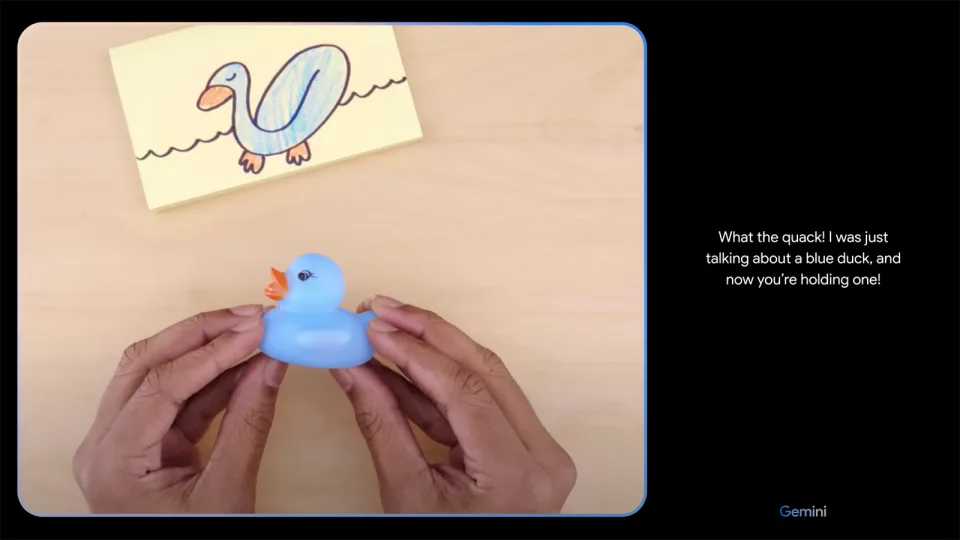

Google is heavily relying on its in-house GPT-4 competitor, Gemini, to the extent that it orchestrated portions of a recent demonstration video. According to an opinion piece in Bloomberg, Google has acknowledged that its video titled “Hands-on with Gemini: Interacting with multimodal AI” was not only edited to accelerate the outputs (as stated in the video description) but also featured a non-existent voice interaction between the human user and the AI.

Contrary to the implied seamless interaction, the actual demonstration involved the creation of “using still image frames from the footage and prompting via text,” rather than Gemini responding to, or predicting, real-time changes such as drawings or object alterations on the table. This revelation diminishes the perceived impressiveness of the video and raises concerns about the transparency of Gemini’s capabilities, especially given the absence of a disclaimer regarding the actual input method employed in the demonstration.

Unsurprisingly, Google refutes any allegations of wrongdoing in this matter, directing The Verge to a post on X written by Gemini’s co-lead, Oriol Vinyals. In the post, Vinyals asserts that “all the user prompts and outputs in the video are real,” and emphasizes that the team created the video with the intention of inspiring developers. Considering the current scrutiny on AI from both the industry and regulatory authorities, it might be prudent for the tech giant to exercise greater sensitivity in its presentations within this field.

Really happy to see the interest around our “Hands-on with Gemini” video. In our developer blog yesterday, we broke down how Gemini was used to create it. https://t.co/50gjMkaVc0

We gave Gemini sequences of different modalities — image and text in this case — and had it respond… pic.twitter.com/Beba5M5dHP

— Oriol Vinyals (@OriolVinyalsML) December 7, 2023